It’s time to revisit this realtime visual synthesis tool, and build the next iteration on a modern platform. The last version was called “VS3” (for Visual Synthesizer, 3rd iteration); over the next few weeks I’ll be banging out an “alpha” VS4. Stay tuned. In the meantime, here’s the backstory.

This visual instrument concept was developed because typical VJ software tends to be more or less a cross between After Effects and Ableton, and I wanted an alternative. Why? Well, certainly not because I don’t love After Effects and Ableton (I use them regularly, of course). It’s just that I found this model limiting for creating the sorts of live visuals I had in mind. An analogy to a live audio mix might be appropriate: conventional VJ software is like an audio mixer and outboard effects – it allows you to mix and process, but to put live, dynamically generated sounds into the mix you need an instrument like a synthesizer. For the live video sets I was doing, I didn’t just want to mix visuals with effects – I wanted to also create original footage, live. So I built a tool based on the architecture of a traditional music synthesizer: original imagery is created in a performance environment by selecting sources of various sorts (as one would select waveforms on a synthesizer) and by applying some sort of method (algorithm) to combine and/or modulate them. That’s the basic idea; of course, there are many more subtleties in practice.
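To make the synthesizer analogy a bit more concrete, here is a minimal sketch of the "select sources, then combine or modulate them" idea. It is not VS3 code: the names (`sine_bars`, `radial_ramp`, `multiply`, `render`) are hypothetical, and a "source" is assumed to be nothing more than a function from a pixel position and a time to a brightness value.

```python
import math
from typing import Callable, List

# Assumption for this sketch: a "source" maps normalized coordinates (x, y)
# and a time t to a brightness in [0, 1].
Source = Callable[[float, float, float], float]


def sine_bars(freq: float) -> Source:
    """Vertical bars that drift over time (loosely, a sine 'oscillator')."""
    return lambda x, y, t: 0.5 + 0.5 * math.sin(2 * math.pi * (freq * x + t))


def radial_ramp() -> Source:
    """Brightness that falls off with distance from the frame center."""
    return lambda x, y, t: max(0.0, 1.0 - 2.0 * math.hypot(x - 0.5, y - 0.5))


def multiply(a: Source, b: Source) -> Source:
    """Combine two sources by multiplication (one source modulates the other)."""
    return lambda x, y, t: a(x, y, t) * b(x, y, t)


def render(source: Source, width: int, height: int, t: float) -> List[List[float]]:
    """Sample a source at pixel centers on a width x height grid at time t."""
    return [
        [source((col + 0.5) / width, (row + 0.5) / height, t) for col in range(width)]
        for row in range(height)
    ]


# A "patch": pick two sources and a method for combining them.
patch = multiply(sine_bars(freq=3.0), radial_ramp())
frame = render(patch, width=8, height=4, t=0.0)
```

Swapping in a different source or a different combining function changes the image without touching the rest of the patch, which is roughly what choosing a different waveform or modulation routing does on a music synthesizer.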

I come from a post-production background (I worked at Avid). But unlike a typical post-production environment (like Media Composer), the VS3 system was designed to allow creative decisions to be made on the fly in a live performance. And as I mentioned, the VSx approach also goes beyond the typical ‘VJ’ paradigm, as the system doesn’t just mix and apply effects to existing sources but generates original footage and motion graphics in real time – not just abstract, procedural sorts of imagery but also sequences and composites involving specific visual semantics. So it can straddle the worlds of traditional cutting, where narrative is the main goal, and VJ-style mixing, where real-time dynamics are essential.

The previous iteration: VS3

The first use of the VS3 system was in Boston, where I assembled a group of artists and musicians to create images and sounds in a live performance environment. The goal was to incorporate cinematic elements with live mixing, live images, and improvised music (including the dub rig, which we recently rebuilt here in LA).

This session was a rehearsal for a performance at the Boston CyberArts Festival and the Boston Independent Film Festival.

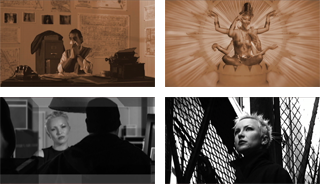

narrative elements …

The performance was to be dynamic, and improvised – but it wasn’t supposed to be merely an eye-candy VJ mix. It was also meant to tell a story. To further this, we scripted and shot a number of narrative scenes (using traditional cinematic language) to be used as sources in the live mix.

These were partially edited in post-production, with the final “cut” executed live. A somewhat non-traditional approach … amusingly, one audience member came up to me after the set and asked “Hey, what movie did you take those scenes from?” I shouldn’t have been too surprised, as many VJs rip footage from cinematic sources. But I might have replied, “There was no movie. You just saw the ‘movie’ – and that was the only time it will ever be seen (because next time it will be different)” …

… and abstract elements

The performance also incorporated a range of abstract visual elements – inspired to some degree by dada and surrealist film from the early 20th century, montage editing, and contemporary motion graphics – most of which were generated on the fly using the VS3 system. Some animated elements were driven by live musical cues such as notes on a keyboard or percussion hits.
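As a rough illustration of how a musical cue can drive an animated element, the sketch below maps a keyboard note to a color and a percussion hit to a brightness pulse that fades out. This is an assumption-laden example rather than how VS3 did it: it assumes the cues arrive as MIDI-style note numbers (0 to 127), and the function names and the decay rate are hypothetical.

```python
import math


def note_to_hue(note: int) -> float:
    """Map a note number to a hue in [0, 1) by pitch class.

    The same note name in any octave gets the same hue.
    """
    return (note % 12) / 12.0


def hit_envelope(seconds_since_hit: float, decay: float = 4.0) -> float:
    """Brightness pulse: 1.0 at the moment of the hit, fading exponentially.

    `decay` (an arbitrary choice here) sets how quickly the pulse fades.
    """
    if seconds_since_hit < 0:
        return 0.0  # the hit has not happened yet
    return math.exp(-decay * seconds_since_hit)


# Example: a note at number 64, sampled a quarter second after a drum hit.
hue = note_to_hue(64)
brightness = hit_envelope(0.25)
```

On each frame, values like `hue` and `brightness` would be fed into whatever parameters the animated element exposes.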

The soundtrack was live and improvised using a combination of prerecorded loops and live electronic instruments, “dub”-style.